In birding, a Big Year is seeing or hearing as many different species of birds as possible in a calendar year. In databases, Big Data cannot be defined nearly as clearly.

You can read and try to understand Wikipedia's definition; that is up to you. What I like most is what Christo Kutrovsky says: “Big Data is actually a license issue. It is partially a license issue – Oracle Database is expensive and MySQL isn’t good at data warehouse stuff. It is partially a storage and network issue of scaling large volumes of data, locality of data is becoming more critical.”

Gwen Shapira wrote a very interesting article entitled “Oracle Database or Hadoop?”. What she (generally) says is not only interesting and a joy to read but, most of all, true: whenever I tell an experienced Oracle DBA about Hadoop and what companies are doing with it, the immediate response is “But I can do this in Oracle”.

And she goes on: “Just because it is possible to do something, doesn’t mean you should.” Yes, and the reason, I would say, is that databases are not always in good shape. And why is that so, one might ask? That is the subject of this blog post.

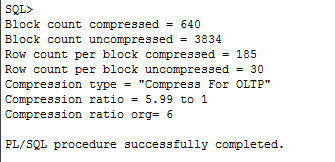

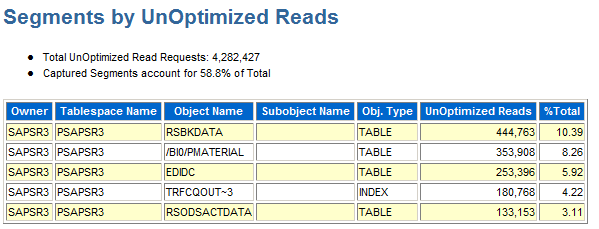

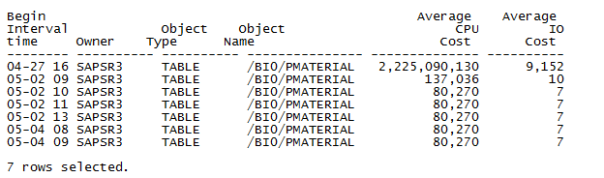

Reason #1: Databases are very seldom reorganized. It is very hard to explain to non-DBAs why this is necessary. Only core/system DBAs comprehend the real benefits of reorganization. It is an extremely important task that is so often neglected.

Reason #2: Old data is never removed or archived. I dare say that most of the data (more than 80%) in all the databases I have seen is rarely touched. Call it junk data, big data, whatever you like.

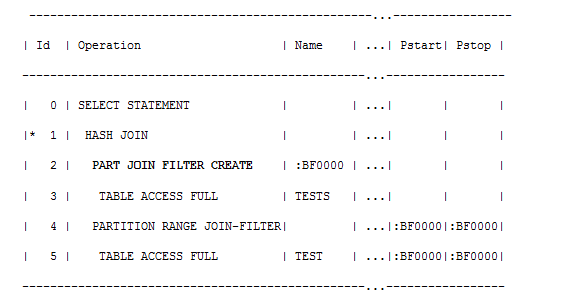

Reason #3: Databases are not upgraded as new databases. That is, I seldom see a new 11g database being created and the data imported from the old database. More often, migration scripts are run (easy to do, with minimal downtime), and what you are left with is a fake 11g database: its Data Dictionary is almost that of 11g, but it has been modified by scripts. Such databases underperform in most cases.

Reason #4: Databases are not patched to the latest possible level. How often I am asked this question: “But Julian, how can one digit at the end matter?” Well, it matters a lot in most cases.

Reason #5: Automation of several critical tasks is not used at all. One very good example is AST (Automatic SQL Tuning).

So, what is the way ahead if all of the above is ignored? You can decide for yourself whether the following reasons, from “Future DBA? 5 reasons to learn NOSQL”, are valid ones for learning NoSQL:

1. Big Data and Scaling

2. Crashing servers, not a problem.

3. Changes aren’t as stressful

4. Be NoSQL Pioneer

5. Work less

Do you believe in Tall Stories? Big Data, Big Problems, Little Talent!

Is Big Data just a trend or is it just another way to solve the big problems we have created over time? Should we concentrate our efforts on “smart use of data” or on “use of big data”?

“Depending on whom you ask, ‘big data’ is either:

A. Bullshit (Brad Feld)

B. No substitute for judgment (David Friend)

C. The marriage of corporate data with external data (Chris Lynch)

D. Data that’s growing faster than Moore’s law (Richard Dale).

After this week, my answer would be E, all of the above.”–Gregory T. Huang, Xconomy

Try the following: type “Big data is” into Google and see if you get what I did:

What happened to machine learning, computational intelligence and data mining?

It is interesting to note that the database conference with the highest h-index is the one on Very Large Data Bases (VLDB).